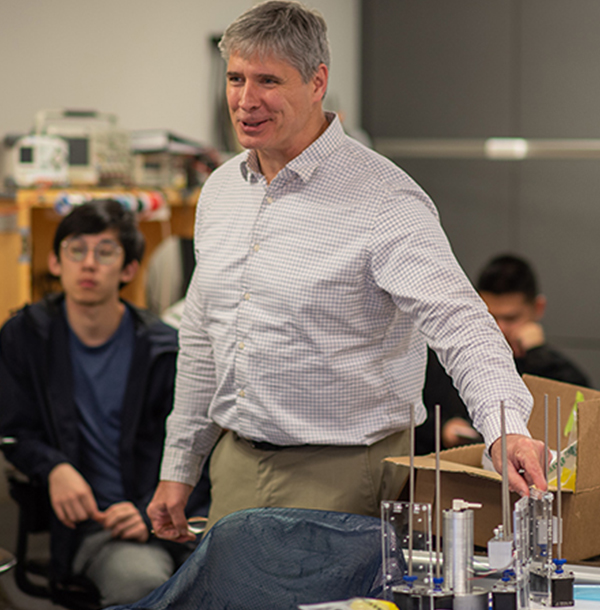

Breakthroughs in Science & Technology Kevin Lynch

Kevin Lynch, Ph.D., is Professor and Chair of Mechanical Engineering and Director of the Center for Robotics and Biosystems at Northwestern University. He is a recipient of Northwestern’s Professorship of Teaching Excellence and the Northwestern Teacher of the Year award in engineering. He received his B.S.E. with honors from Princeton University and his doctorate in Robotics from Carnegie Mellon University.

00.26

[Applause] [Music] Kevin Lynch is a professor of mechanical

01.00

engineering at Northwestern, where he directs the interdisciplinary Center for Robotics and Biosystems. Research at the center includes robots inspired by biology and robots that collaborate intimately with people. He leads the number-one-ranked graduate school in robotics. A leading-edge expert and great teacher, he's editor-in-chief of the Transactions on Robotics journal and the author of Modern Robotics, a leading textbook in, as you might expect, robotics. Please welcome Kevin Lynch.

Thanks very much for the introduction, Tyler, and thanks to all of you for being here today. I'm going to talk a little bit today about our future with robots, and for context, when I'm talking about robots, I'm talking about where artificial intelligence meets the physical world. But to give us a little context for our future with robots, I want to talk a little bit about our present with robots, in particular in fulfillment, defense, agriculture,

02.01

medicine, entertainment, and aerospace. All the stuff that you've been ordering from Amazon starts in a warehouse, and there's an army of robots coordinating with each other to pick up stacks of products and carry them to a person who picks items off these shelves and puts them into a box. It's important to note, though, that the last step is a person; there's not a robot for that part. In the military, we're often asking our ground troops to carry backpacks with up to 80 pounds of equipment, and this is a tremendous burden. So the robot you see here, the WOLF, which stands for Wheeled Offload Logistics Follower, is designed to go with squads, carry their stuff for them, and follow them into the field. In agriculture, automation is poised for huge growth, and the example that you see here uses machine vision technology to identify weeds and to selectively spray

03.01

herbicide on those weeds. By doing this, you're saving herbicide, saving money, and of course acting in a much more ecologically friendly way. In medicine, the Intuitive Surgical da Vinci SP allows a doctor to do precise, minimally invasive surgery using three tools and the 3D stereo view that the cameras are giving them. And if you watched the Super Bowl this year, you might have seen Boston Dynamics robots selling beer for Sam Adams. What you're seeing in this video not long ago would have been CGI, but now these are real robots. And finally, I have to give a shout-out to NASA. Bobby was here talking about it earlier; he did not mention Ingenuity, but about a year ago Ingenuity became the first craft to achieve powered flight on another planet, mastering the thin atmosphere of Mars. So these are some of the really impressive and inspiring things

04.00

that are happening in robotics today. So the question we might ask is: what does that mean for the future? What's coming next? Of course, Hollywood is good at telling us what might come next, and one particularly influential vision is The Terminator. This came out when I was in high school, and I have to admit that I love the movie, but as a roboticist, it is not good for business. When we think about The Terminator, one reason it has so much resonance is that there are reasons to be concerned about where our future is going with robots. One of those reasons is that we see a steady stream of articles, like the one you see here, that talk about how the robots are coming to replace our jobs. Oftentimes this serious research gets boiled down to a simple sound bite, and the sound bite for this article is "every robot you install takes three jobs," without talking about the opportunities that are created. And then you take serious research like

05.00

this, turned into a sound bite, and it sort of metastasizes into visceral images of the future that people remember and that spur these dystopian views of what's coming with robots. Now, robots are going to change the way we work. Robots are going to impact people's lives, and there's no doubt about that. The question is: does that mean we don't invest in robotics research? Of course, the answer there is no. Robots are engines of productivity, and I think the real question is how we use that increased productivity, and the improvement in the quality of life that it should bring for all of us, and make sure that it's shared as a society and not just benefiting a few. So I think there's a very real role for policy to play in our future with robots, and unfortunately I don't have time to talk about that today. But what I do want to talk about is some of the technology, and I want to talk about why I think that we're not going to see that

06.02

Terminator future; we're not going to see the future that you see in this New Yorker image. Two reasons: first, I think we are going to come up with the policies that we need. Second, I do not foresee a singularity in which our technological progress outpaces our ability to respond to it through policy. So I want to show this collection of videos to show you why I don't think that robots are about to replace us. This is from seven years ago, a DARPA Robotics Challenge. These are robots built by some of the leading labs in the world, trying to make humanoid robots do the simplest tasks that any one of us in this room could do, and failing spectacularly. So the question is: why are they failing so spectacularly? I mean, it's kind of embarrassing as a roboticist to say, hey, this is the state of the art of robots, but the problem here is

07.00

that they're trying to make these robots autonomous. This is all about autonomy: how do you make the robot work completely by itself in a human-like environment? That's a really tough nut to crack, and that's why, when we have robots on factory floors, we engineer those factory floors so people are not in them; they're designed for robots to put together our cars. At Northwestern, we're interested in autonomy just like everybody else, but we also embrace the fact that oftentimes robots are best when they're augmenting our capabilities, when they're designed to work with people, so that we can use natural human intelligence, awareness, and adaptability to new tasks and pair it with the strength and dexterity that robots can give us. So when I think about our future with robots, I'm going to use a different image from a different movie. This is from The Empire Strikes Back. After Luke has his lightsaber duel with Darth Vader and gets his hand cut off, he's fitted with a prosthetic hand.

08.01

That prosthetic hand is a robot, and that robot is working intimately with Luke to expand his capabilities. So I'm going to talk today about our collaborative future with robots. Achieving the sort of capability that you saw with Luke's prosthetic hand requires a lot of different people working across disciplines: mechanical engineering for design and dynamics; materials science to create lightweight materials and soft materials that can interface with people; computer science for AI and the algorithms; electrical engineering for advanced controls; and biomedical engineering and our medical school to tell us how to interface with humans safely. We work very closely with the Shirley Ryan AbilityLab, which used to be called the Rehabilitation Institute of Chicago, and it's one of the leading research hospitals in rehabilitation technology. All of these groups are coming together and contributing to the Center for Robotics and Biosystems, and today

09.00

I'm going to tell you a little bit about the work that we're doing to try to achieve this collaborative future. I'm going to focus on three themes: collaboration, interface, and augmentation. So, CIA. I don't know if that's scary too, but anyway, it's easy to remember: collaboration, interface, and augmentation. I want to start by talking about collaboration, and a particular kind of collaboration that we call cobots. Cobots are collaborative robots, designed specifically to work with people safely. The person provides the intelligence and the sensory awareness, and the robot provides the dexterity and strength. In this case, the human is trying to manipulate this bulky PVC pipe assembly and perform an assembly action. This would be very difficult for a single person to do, and two people would also struggle. Here the robots are providing gravity compensation and allowing the person to solve the problem

10.01

effortlessly. We see applications of cobots in factories, in assembly, in warehouses, and in construction, and I hope someday even on Mars we'll have assistive robots helping people perform their maintenance and building duties. Another example is what we call shared autonomy, and this is work by my colleague Brenna Argall. One of the issues with designing assistive devices for people who have suffered a disability is that that very disability may prevent them from using the device properly. In this case, we have somebody with a high spinal cord injury who is trying to drive a wheelchair, and what we want to do is create a robotic wheelchair that can augment their control commands and, in this case, help them drive through a doorway without collision. So the idea is called shared autonomy: the human is providing input, the robot is providing input, and together they

11.01

collaborate, so that the robot can infer the human's intent but can make sure that tremors or other sorts of unintended control signals do not turn into a collision. Here's one more example of collaboration. This is the KineAssist, by my colleagues Michael Peshkin and Ed Colgate. It is a rehabilitation device for people relearning gait after a stroke. The device provides variable weight support while the person trains on a treadmill, and allows them to be aggressive in their training because they don't have to worry about falling; if they fall, the device will catch them. It also helps the physical therapist, who now avoids the repetitive stress injuries that might come from having to support the person physically. So I'm going to move on to interface. When I talk about interface, I'm talking about the physical or signal connections between the robot and the human. One kind of interface you might have heard of is brain-machine

12.00

interfaces, where we actually surgically implant an electrode array in the brain to try to figure out what the person is trying to do and interpret that to control a robot. Now, that's obviously a very invasive procedure, so today I want to talk about a different approach. This is work by John Rogers, developing flexible, wireless, skin-like circuits. Most circuits we're familiar with, the ones in your phone, live on rigid chips that are designed to be permanent. This is designed to laminate smoothly on your skin and to be washed off when you're finished with it. What you see here is somebody who's had a transhumeral amputation, and now they have this wireless circuit laminated onto their skin that can collect EMG signals, figure out what the muscle activations are, and even stimulate the skin. That's very important for doing things like controlling a prosthetic, like you see in the other image. There are other applications for skin-like

13.00

flexible electronics, and here's one in the newborn intensive care unit. In the intensive care unit, the babies are usually being monitored and wired, and that wiring prevents mother and father from picking up the baby and interacting. Here, there's a wireless electrocardiogram sensor that monitors the baby's heartbeat and allows for that skin-to-skin contact between the parents and the child. So I want to talk a little bit about augmentation, and in particular getting back to intuitive control of a prosthetic, in a procedure that was pioneered by my colleague Todd Kuiken. What you see here is the normal musculature of the arm and the chest, and of course we're controlling the contractions of our muscles by nerves that carry signals from our brain down to the muscles. After an amputation, in this case at the shoulder, the nerves are severed, but a doctor can perform what's called a

14.00

targeted muscle reinnervation surgery, where those nerves are rerouted and re-implanted in the chest. What will happen is those nerves will grow into the chest, and now, when a person thinks about controlling the arm that they no longer have, they're creating muscle activations in their chest. The bioamplification from this growth process allows those signals to be picked up by surface EMGs of the kind that we just saw in the previous slides, and we can use those signals to control a prosthetic arm. So the idea is: the person thinks about controlling their arm, the muscle in their chest contracts, the EMGs pick it up, and the arm moves. Here's a subject using a prosthetic arm after TMR surgery. She's a transhumeral amputation patient, and here you can see that she's just thinking about moving her arm around, the signals are being picked up by the EMG, and she's able to control this prosthetic arm from DEKA Research.
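As a rough illustration of the step described above, where a surface EMG picks up the muscle contraction and the arm moves, here is a minimal Python sketch. It assumes a common textbook approach (rectify the signal, smooth it with a moving average, then apply a threshold). The function names, window size, and threshold are hypothetical and are not taken from the talk or from any real prosthetic controller.

```python
def emg_envelope(samples, window=5):
    """Rectify a raw EMG signal and smooth it with a trailing moving average."""
    rectified = [abs(s) for s in samples]  # EMG swings positive and negative
    envelope = []
    for i in range(len(rectified)):
        start = max(0, i - window + 1)
        chunk = rectified[start:i + 1]
        envelope.append(sum(chunk) / len(chunk))
    return envelope


def arm_command(envelope_value, threshold=0.2, max_level=1.0):
    """Map an envelope value to a normalized joint speed in [0, 1].

    Values below the (assumed) threshold are treated as rest and give 0.0.
    """
    if envelope_value < threshold:
        return 0.0
    scaled = (envelope_value - threshold) / (max_level - threshold)
    return min(1.0, scaled)


# Hypothetical signals: a resting muscle and a strong contraction.
resting = [0.05, -0.04, 0.06, -0.05]
contracting = [0.9, -0.8, 1.0, -0.9, 0.85]

rest_cmd = arm_command(emg_envelope(resting)[-1])
move_cmd = arm_command(emg_envelope(contracting)[-1])
```

With these made-up numbers, the resting signal stays below the threshold and maps to a command of 0.0, while the contraction produces a positive speed command. A real system would typically classify patterns across many electrodes rather than threshold a single channel.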

15.03

Another fascinating thing about this is that, because those same nerves that used to control the muscles of your arm are also carrying sensory signals, if you stimulate the chest, or in her case the arm, in the same area, she can perceive that as contact at her fingertips. So if you put tactile sensors on the end of this robot arm and carry those signals back to stimulate her chest or her arm, she can actually feel what's at the end of her prosthetic. I want to talk about one more example of augmentation, and this is for people who have suffered a spinal cord injury. They don't need a mechanical prosthetic, but they've lost the connection from the brain to the muscles that control the arm. For these patients, we can perform a functional electrical stimulation surgery, or FES, where you implant a stimulator pack in the abdomen that stimulates the muscles of the back and the chest and the shoulder and

16.00

the arm to cause motion of the arm. We call this a neuroprosthetic instead of a traditional prosthetic. We can talk to that stimulator pack through an RF link through the skin, so an external computer can stimulate the muscles, and if we close the loop with a brain-machine interface of some kind, we can take what the person is thinking and turn it into action at the arm. The whole idea is to try to provide more autonomy to people who have suffered spinal cord injury. Here's a video of us calibrating this neuroprosthetic. The arm is a very complicated device; it's got lots of muscles and lots of nerves. What we're doing here is moving her arm around while also stimulating the muscles, and we're building up a model that maps our stimulation to the forces generated at the arm. Once we have that model, in this top right video you can see purely FES control. It's a lot shakier

17.02

because there are some limitations to this approach, but the idea is that she should be able to think about reaching out, grabbing a piece of food, and bringing it to her mouth. It's all about trying to restore some activities of daily living. So these are some of the technologies in collaboration, interface, and augmentation that we're working on in our center. Some of these are already beginning to have an impact on people's lives, so I think of this as a near-future version of our future with robots. But it's also fun to think about where we are going further down the road. The way that I think about it is that robots and people are going to be co-evolving. While biological evolution may continue, the pace of technological evolution will far outpace it. So the question is: where are we going? Are we going to achieve some sort of robot-human hybrid? In thinking about that, we can take as an example natural evolution from 400 million years

18.01

ago. So here's a fish in the ocean 400 million years ago. Life is tough; it's crowded. And life on land is starting to look better: it's not as crowded, and there's not as much competition. Back then, fish evolved large eyes, and the question is what they did with those eyes. This is work by my colleague Malcolm MacIver, who saw that right at that transition, about 400 million years ago, the eye sockets of the fish started to increase dramatically. So what can you do with that? Well, one thing is that your field of vision has dramatically increased. If you can only see out this far, there's no reason to plan anything; I'm going to react all the time to whatever comes into my visual field. But if I can see into the distance, now it makes sense to look into the future; you can think about planning. So here's our fish, who has identified a prey insect in the distance and wants to figure out how to go get it,

19.00

and if it's able to think ahead into the future, it knows that going straight ahead is going to put it into the field of view of that insect, and the insect is going to escape. On the other hand, if it takes a different path, it's going to be successful. So now there's value in devoting evolutionary resources to getting foresight, and this is called the natural evolution of prospective cognition: thinking about what your actions now mean for the future. Now, we've come a long way since this fish, I think, but we still have difficulty understanding where we're going. We still have difficulty making decisions now that will affect us 10 years or 100 years in the future. So the question is: are we headed toward a technological evolution of prospective cognition, where we're not only physically augmenting people but also cognitively augmenting people? There's a great video, "Your Short-Sighted Inner Fish"; you can look it

20.00

up on YouTube. It's a great example of science communication, and you can learn a bit more about this concept. So I'd just like to close by thanking my colleagues at the Center for Robotics and Biosystems for their work on collaboration, interface, and augmentation toward our collaborative future with robots, and I'd like to thank you, the audience, for spending some time with me today. Thank you. [Applause]
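For readers who want something concrete, the shared autonomy idea from earlier in the talk (the human provides input, the robot provides input, and the two are combined so that unintended commands do not cause a collision) can be sketched in Python. This is only an illustrative guess at one simple arbitration scheme, linear blending weighted by distance to the nearest obstacle. The function name `blend_command`, the `safe_distance` parameter, and the numbers are assumptions, not the method used in the wheelchair work described in the talk.

```python
def blend_command(human_cmd, robot_cmd, obstacle_distance, safe_distance=1.0):
    """Blend human and robot velocity commands, each a (linear, angular) pair.

    alpha = 1.0 gives the human full control (far from obstacles);
    alpha = 0.0 gives the robot full control (touching an obstacle).
    """
    alpha = max(0.0, min(1.0, obstacle_distance / safe_distance))
    return tuple(alpha * h + (1.0 - alpha) * r
                 for h, r in zip(human_cmd, robot_cmd))


# Hypothetical commands: the user's joystick input veers toward the door frame,
# while the robot's planner proposes a slower, straighter path.
human = (1.0, 0.5)
robot = (0.2, 0.0)

far_from_door = blend_command(human, robot, obstacle_distance=2.0)
at_door_frame = blend_command(human, robot, obstacle_distance=0.0)
halfway = blend_command(human, robot, obstacle_distance=0.5)
```

In open space the blended command equals the human's command, and right at the obstacle it equals the robot's. In between, the two are mixed in proportion to the remaining clearance. Inferring the user's intent, which the talk also mentions, is a separate step that this sketch does not attempt.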